Introducing My Latest Projects

… Or, How to Procrastinate Productively.

I decided to make one of my current projects open source and post it on Google Code just for fun. The project is a toolchain that I’m using to remake Thrust. In practice, I split it into two separate projects: the game engine with its related tools (called klystron), and a music editor that uses the engine.

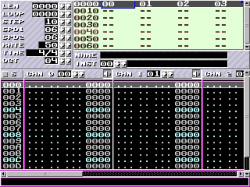

Here are two videos I made a while ago that demonstrate the engine. The first is the music editor (called klystrack) — it’s much less ugly at the moment but the sound synthesis is the same, and that’s what matters:

The sound engine (“Cyd”) is basically a very simple software synthesizer with capabilities comparable to the SID or any 8-bit machine from the 80s. The editor is a fairly standard tracker, much like GoatTracker.
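To give a rough idea of what "very simple software synthesizer" means here, below is a minimal C sketch of a pulse-wave oscillator, the kind of waveform the SID is known for. This is not Cyd's actual code: the `PulseOsc` struct, the function names and the fixed output amplitude are all made up for illustration, and it assumes a 32-bit phase accumulator that wraps around on overflow.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical pulse oscillator (illustration only, not taken from Cyd). */
typedef struct {
    uint32_t phase;       /* phase accumulator, wraps around at 2^32 */
    uint32_t step;        /* phase increment per output sample */
    uint32_t pulse_width; /* output is high while phase < pulse_width */
} PulseOsc;

/* duty is the fraction of each cycle spent high, from 0.0 to 1.0. */
void pulse_osc_init(PulseOsc *o, double freq_hz, double sample_rate, double duty)
{
    o->phase = 0;
    /* One full cycle corresponds to 2^32 phase units. */
    o->step = (uint32_t)(freq_hz / sample_rate * 4294967296.0);
    o->pulse_width = (uint32_t)(duty * 4294967295.0);
}

/* Fill out[0..n-1] with signed 16-bit samples. */
void pulse_osc_render(PulseOsc *o, int16_t *out, size_t n)
{
    for (size_t i = 0; i < n; ++i) {
        out[i] = (o->phase < o->pulse_width) ? 8192 : -8192;
        o->phase += o->step; /* unsigned overflow wraps, restarting the cycle */
    }
}
```

Changing `pulse_width` while the note plays gives the familiar pulse-width modulation sweep, and a tracker would typically drive `step` from its note table.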

The graphics half of the engine is basically a wrapper around a fairly fast collision detection system built on SDL (pixel-accurate, or it wouldn’t be much good for a thrustlike). It also handles background collisions and drawing. As you may have guessed, the whole point is to provide a limited but still helpful set of routines for creating 2D games not unlike the video games of 1991.
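For anyone wondering what pixel-accurate collision detection involves, here is a minimal C sketch of the usual two-step approach: first intersect the bounding boxes, then compare the actual pixels inside the overlap. It is not how klystron does it internally. The `Sprite` struct, the one-byte-per-pixel mask and `sprites_collide` are assumptions made for this example, and it deliberately leaves SDL out so it compiles on its own.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical sprite (illustration only): a w*h collision mask,
   one byte per pixel, where nonzero means the pixel is solid. */
typedef struct {
    int x, y;            /* top-left corner in world coordinates */
    int w, h;            /* mask size in pixels */
    const uint8_t *mask; /* w*h bytes, row by row */
} Sprite;

static int max_i(int a, int b) { return a > b ? a : b; }
static int min_i(int a, int b) { return a < b ? a : b; }

bool sprites_collide(const Sprite *a, const Sprite *b)
{
    assert(a && b && a->mask && b->mask);

    /* Step 1: intersect the bounding boxes. No overlap, no collision. */
    int left   = max_i(a->x, b->x);
    int top    = max_i(a->y, b->y);
    int right  = min_i(a->x + a->w, b->x + b->w);
    int bottom = min_i(a->y + a->h, b->y + b->h);
    if (left >= right || top >= bottom)
        return false;

    /* Step 2: inside the overlap, look for a pixel solid in both masks. */
    for (int y = top; y < bottom; ++y) {
        for (int x = left; x < right; ++x) {
            uint8_t pa = a->mask[(y - a->y) * a->w + (x - a->x)];
            uint8_t pb = b->mask[(y - b->y) * b->w + (x - b->x)];
            if (pa && pb)
                return true;
        }
    }
    return false;
}
```

The bounding box test throws out most sprite pairs cheaply, so the per-pixel loop only runs for sprites that are already close together. A faster version would pack the mask into bits and compare whole words at a time.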

And here’s proof that I’m actually working on the game itself (the sound effects are created in real time by the sound engine):

A note on Google Code: it’s rather nice. It provides the standard open source development stuff like source control and such, but I really like how clean and hassle-free it is. Adding a project takes a minute, and after that it’s simply coding and some quick documentation on the project wiki. The project wiki is a good example of how simple but elegant the system is: the wiki pages actually exist inside the source control as files, just like your source code.

Go check out Google Code and, while you’re at it, contribute to my projects. :)