Blog Experiment: Desaturating and resizing images

More from the muse called Referer:.

Resizing images

Resizing an image is an interesting subject. There are many ways to do it, and the same result can be reached with very different methods. Quickly summarized, I’d say resizing an image can be categorized like this:

- Enlarging
  - Resampling
    - Nearest neighbor (blocky)
    - Linear interpolation (blurry)
    - Spline interpolation (e.g. cubic, Lanczos etc.; blurry, and sometimes exaggerates contrast so that edges stand out too much)
  - Super-resolution (“CSI” style zooming)
- Shrinking
  - Resampling
    - Nearest neighbor (blocky)
    - Linear interpolation (averaging)
    - Spline interpolation (sometimes better than averaging, especially when the new size isn’t a simple fraction of the original, such as a half or a third)
- Re-targeting (“content aware resizing”, keeps important details unchanged)
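To make the resampling categories above a bit more concrete, here is a minimal sketch of nearest-neighbor resampling in C. It assumes an 8-bit greyscale image stored row by row with no padding; the function name `resize_nearest` is my own, hypothetical, not from any library. Each output pixel simply copies the closest source pixel, which is exactly why enlarged images look blocky.

```c
#include <stddef.h>

/* Nearest-neighbor resampling of a tightly packed 8-bit greyscale image.
 * Works for both enlarging and shrinking. */
void resize_nearest(const unsigned char *src, int sw, int sh,
                    unsigned char *dst, int dw, int dh)
{
    for (int y = 0; y < dh; y++) {
        /* Map the centre of the destination row back to a source row:
         * sy = floor((y + 0.5) * sh / dh), always in [0, sh - 1]. */
        int sy = (int)(((long)y * 2 + 1) * sh / (2L * dh));
        for (int x = 0; x < dw; x++) {
            int sx = (int)(((long)x * 2 + 1) * sw / (2L * dw));
            dst[(size_t)y * dw + x] = src[(size_t)sy * sw + sx];
        }
    }
}
```

Enlarging a 2x2 image to 4x4 this way just turns every source pixel into a 2x2 block.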

Now, for ordinary resizing there are many libraries that do it for you. There is no point in recreating the same algorithms over and over, considering you will most likely use either bilinear interpolation, a spline algorithm or some special-purpose algorithm. When using SDL, I have used SDL_gfx to resize images both larger and smaller. It only does basic interpolation, but for slight resizing it should be good enough.
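For comparison, here is a rough sketch of bilinear interpolation under the same assumptions (8-bit greyscale, tightly packed rows; `resize_bilinear` is a hypothetical name). This is not SDL_gfx’s code, just the general idea: each output pixel blends the four nearest source pixels, weighted by distance, which is where the blurriness comes from when enlarging.

```c
#include <stddef.h>

static int clampi(int v, int lo, int hi) { return v < lo ? lo : (v > hi ? hi : v); }

/* Bilinear resampling of a tightly packed 8-bit greyscale image. */
void resize_bilinear(const unsigned char *src, int sw, int sh,
                     unsigned char *dst, int dw, int dh)
{
    for (int y = 0; y < dh; y++) {
        /* Source-space position of the destination pixel centre. */
        float fy = (y + 0.5f) * sh / dh - 0.5f;
        if (fy < 0) fy = 0;
        int y0 = clampi((int)fy, 0, sh - 1);
        int y1 = clampi(y0 + 1, 0, sh - 1);
        float ty = fy - y0;                 /* vertical blend weight */
        for (int x = 0; x < dw; x++) {
            float fx = (x + 0.5f) * sw / dw - 0.5f;
            if (fx < 0) fx = 0;
            int x0 = clampi((int)fx, 0, sw - 1);
            int x1 = clampi(x0 + 1, 0, sw - 1);
            float tx = fx - x0;             /* horizontal blend weight */
            /* Blend horizontally on two rows, then blend the rows. */
            float top = src[(size_t)y0 * sw + x0] * (1 - tx) + src[(size_t)y0 * sw + x1] * tx;
            float bot = src[(size_t)y1 * sw + x0] * (1 - tx) + src[(size_t)y1 * sw + x1] * tx;
            dst[(size_t)y * dw + x] = (unsigned char)(top * (1 - ty) + bot * ty + 0.5f);
        }
    }
}
```

Stretching the two-pixel row `{0, 100}` to four pixels gives `{0, 25, 75, 100}`: a smooth ramp instead of two hard blocks.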

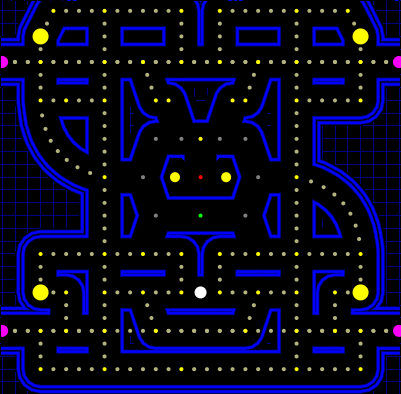

Another free piece of code for resizing is the Super Eagle algorithm, which was specifically created for enlarging emulator (Snes9x) graphics: it doesn’t blur edges and works quite well at removing “jaggy” lines. It should be available for [your preferred graphics library]; it has been adapted to so many projects that there is very probably an implementation floating around for you to steal.
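Super Eagle itself is too long to reproduce here, so as a stand-in, here is a sketch of a different but related edge-preserving pixel-art scaler, Scale2x (also known as EPX). It is not Super Eagle; it only shows the shared idea of looking at neighboring pixels to decide how to fill the enlarged block instead of blurring. It assumes 32-bit pixels, compares colors exactly and clamps at the image borders; `scale2x` and `pixel_at` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Read a pixel, clamping coordinates to the image edges. */
static uint32_t pixel_at(const uint32_t *img, int w, int h, int x, int y)
{
    if (x < 0) x = 0;
    if (x >= w) x = w - 1;
    if (y < 0) y = 0;
    if (y >= h) y = h - 1;
    return img[(size_t)y * w + x];
}

/* Scale2x / EPX: every source pixel P becomes a 2x2 block. A corner of the
 * block takes the color of two matching neighbors that form an edge there,
 * otherwise it stays P. dst must hold (2 * w) * (2 * h) pixels. */
void scale2x(const uint32_t *src, int w, int h, uint32_t *dst)
{
    size_t dw = (size_t)w * 2;
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            uint32_t p = pixel_at(src, w, h, x, y);
            uint32_t a = pixel_at(src, w, h, x, y - 1); /* above */
            uint32_t b = pixel_at(src, w, h, x + 1, y); /* right */
            uint32_t c = pixel_at(src, w, h, x - 1, y); /* left  */
            uint32_t d = pixel_at(src, w, h, x, y + 1); /* below */
            uint32_t e0 = p, e1 = p, e2 = p, e3 = p;    /* TL, TR, BL, BR */
            if (c == a && c != d && a != b) e0 = a;
            if (a == b && a != c && b != d) e1 = b;
            if (d == c && d != b && c != a) e2 = c;
            if (b == d && b != a && d != c) e3 = d;
            size_t o = (size_t)(2 * y) * dw + (size_t)(2 * x);
            dst[o] = e0;
            dst[o + 1] = e1;
            dst[o + dw] = e2;
            dst[o + dw + 1] = e3;
        }
    }
}
```

On a staircase-shaped diagonal edge, the corner sub-pixels along the edge are filled from the neighbors, so the enlarged diagonal comes out as a finer staircase rather than big square steps, and no new in-between colors are introduced.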


As for commercial implementations of super-resolution, this looks pretty awesome. So does this online bitmap-to-vector image converter (vector images do not lose sharpness when enlarged).

Do keep in mind that you can also use your computer’s video card to scale images: pretty much all computers have some 3D features, which means they can quickly draw an image on the screen at any size you like, even if you don’t need interactivity. I use this method in Viewer2 and found it easy to implement. You can also shrink images the same way: a graphics library will usually have a function that generates mipmaps, which give very nice results when the image is drawn smaller than the original.
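Mipmap generation is normally done for you by the graphics library or driver, but the basic idea is simple enough to sketch: each level is half the size of the previous one, and each new pixel is the average of a 2x2 block (a box filter). This is a minimal illustration assuming 8-bit greyscale data, not what any particular library does internally; `next_mip_level` is a hypothetical name.

```c
#include <stddef.h>

/* Build the next mipmap level by averaging 2x2 blocks. The new size is
 * half the old one (rounded down, never below 1); on odd sizes the last
 * row/column is dropped, and a 1-pixel dimension is simply reused. */
void next_mip_level(const unsigned char *src, int sw, int sh,
                    unsigned char *dst, int *dw, int *dh)
{
    int w = sw > 1 ? sw / 2 : 1;
    int h = sh > 1 ? sh / 2 : 1;
    for (int y = 0; y < h; y++) {
        int y0 = 2 * y, y1 = (2 * y + 1 < sh) ? 2 * y + 1 : sh - 1;
        for (int x = 0; x < w; x++) {
            int x0 = 2 * x, x1 = (2 * x + 1 < sw) ? 2 * x + 1 : sw - 1;
            unsigned sum = src[(size_t)y0 * sw + x0] + src[(size_t)y0 * sw + x1]
                         + src[(size_t)y1 * sw + x0] + src[(size_t)y1 * sw + x1];
            dst[(size_t)y * w + x] = (unsigned char)((sum + 2) / 4); /* rounded mean */
        }
    }
    *dw = w;
    *dh = h;
}
```

Calling this repeatedly on its own output until the image is 1x1 gives the whole mipmap chain; when drawing, the GPU picks (or blends) the level closest to the on-screen size.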

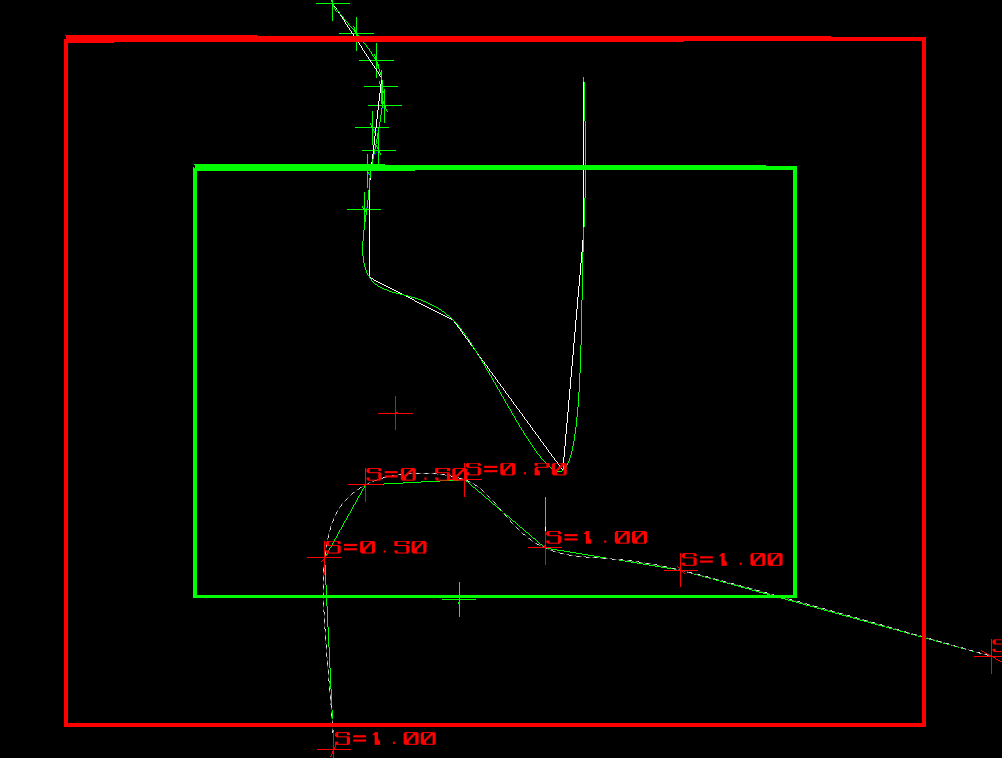

And now the reason why I started writing these (lame) blog entries: image re-targeting, or seam carving. My implementation works somewhat (mainly with cartoon-style images that have as few color gradients as possible), but there are many other implementations that are good.

- Liquid Rescale – seam carving for GIMP (open source; please, for the love of “Bob”, look into this source code rather than mine).

- A good list of different implementations – sadly with mine, too.

- Seam carving is such a notable algorithm that even Wikipedia acknowledges its existence.
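For the curious, here is a rough sketch of the core of seam carving. It is not my implementation nor Liquid Rescale’s, just the textbook outline: give every pixel an energy (here the sum of absolute horizontal and vertical differences, one of several possible choices), find the cheapest top-to-bottom path with dynamic programming, and remove it. It assumes an 8-bit greyscale image; `carve_vertical_seam` and `pixel_energy` are hypothetical names.

```c
#include <stdlib.h>
#include <string.h>

/* Pixel energy: absolute horizontal plus vertical difference, clamped at edges. */
static int pixel_energy(const unsigned char *img, int w, int h, int x, int y)
{
    int xl = x > 0 ? x - 1 : x, xr = x < w - 1 ? x + 1 : x;
    int yu = y > 0 ? y - 1 : y, yd = y < h - 1 ? y + 1 : y;
    return abs(img[y * w + xr] - img[y * w + xl]) +
           abs(img[yd * w + x] - img[yu * w + x]);
}

/* Removes the lowest-energy vertical seam. On success the image is
 * repacked in place as (w - 1) x h and 0 is returned; -1 on failure. */
int carve_vertical_seam(unsigned char *img, int w, int h)
{
    if (w < 2 || h < 1) return -1;
    long *cost = malloc(sizeof *cost * (size_t)w * (size_t)h);
    int *seam = malloc(sizeof *seam * (size_t)h);
    if (!cost || !seam) { free(cost); free(seam); return -1; }

    /* cost[y][x] = energy(x, y) + cheapest of the three cells above it. */
    for (int x = 0; x < w; x++)
        cost[x] = pixel_energy(img, w, h, x, 0);
    for (int y = 1; y < h; y++) {
        const long *up = cost + (size_t)(y - 1) * w;
        for (int x = 0; x < w; x++) {
            long best = up[x];
            if (x > 0 && up[x - 1] < best) best = up[x - 1];
            if (x < w - 1 && up[x + 1] < best) best = up[x + 1];
            cost[(size_t)y * w + x] = best + pixel_energy(img, w, h, x, y);
        }
    }

    /* Backtrack from the cheapest cell in the bottom row. */
    const long *last = cost + (size_t)(h - 1) * w;
    int bx = 0;
    for (int x = 1; x < w; x++)
        if (last[x] < last[bx]) bx = x;
    seam[h - 1] = bx;
    for (int y = h - 2; y >= 0; y--) {
        const long *row = cost + (size_t)y * w;
        int sx = seam[y + 1], best = sx;
        if (sx > 0 && row[sx - 1] < row[best]) best = sx - 1;
        if (sx < w - 1 && row[sx + 1] < row[best]) best = sx + 1;
        seam[y] = best;
    }

    /* Repack each row without its seam pixel. Writes never overtake
     * unread source bytes, so these forward memmove calls are safe. */
    for (int y = 0; y < h; y++) {
        unsigned char *dst = img + (size_t)y * (w - 1);
        const unsigned char *src = img + (size_t)y * w;
        memmove(dst, src, (size_t)seam[y]);
        memmove(dst + seam[y], src + seam[y] + 1, (size_t)(w - 1 - seam[y]));
    }
    free(cost);
    free(seam);
    return 0;
}
```

Calling it repeatedly shrinks the image one column at a time; flat areas (low energy) are removed first, while edges survive. Cartoon-style images with large flat areas are the easy case, which matches my experience above.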

Desaturating (greyscaling) images

Desaturating (i.e. turning color images into black and white) is a subject that interests a portion of the people who find their way to my site. While this is a trivial problem to solve in any programming language, there are actually some quirks to it. Here goes.

This is the easiest (and probably fastest) method:

```c
Grey = (R + G + B) / 3;
```

However, when speed is not important, or if you simply are more pedantic than you are lazy, a more accurate mixing ratio is as follows:

```c
Grey = 0.3 * R + 0.59 * G + 0.11 * B;
```

This is because the human eye isn’t equally sensitive to all three color components. Those three ratios are the most often quoted ones (they are the ITU-R BT.601 luma weights 0.299, 0.587 and 0.114, rounded), although each eye probably needs a slightly tweaked mix. But the main idea is that our eyes are much more sensitive to green than to red, and a bit more sensitive to red than to blue.
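Side by side, the two formulas might look like this in C (a sketch; the function names are hypothetical). The `+ 0.5f` in the weighted version rounds to the nearest integer instead of truncating.

```c
/* The fast, equal-weight average. */
unsigned char grey_average(unsigned char r, unsigned char g, unsigned char b)
{
    return (unsigned char)((r + g + b) / 3);
}

/* The perceptually weighted mix (green counts most, blue least). */
unsigned char grey_weighted(unsigned char r, unsigned char g, unsigned char b)
{
    return (unsigned char)(0.3f * r + 0.59f * g + 0.11f * b + 0.5f);
}
```

The difference shows clearly on pure colors: the average turns pure green and pure blue into the same grey (85), while the weighted mix makes green a light grey (150) and blue a dark one (28), which is closer to how bright they actually look.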

Lossy image compression makes use of this too, spending more bits on the information the eye is most sensitive to (JPEG, for example, typically stores brightness at a higher resolution than color). Also, back when 24-bit color wasn’t quite yet available, the 16-bit modes usually gave the green component an extra bit (5 bits for red, 6 for green and 5 for blue).
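To illustrate the 16-bit case: in the common RGB565 layout, red and blue get five bits each and green gets six. Here is a minimal sketch of packing and unpacking such pixels (hypothetical function names). When expanding back to 8 bits, the top bits are replicated into the low bits so that full intensity maps to 255 rather than 248 or 252.

```c
#include <stdint.h>

/* Unpack RGB565 (rrrrrggg gggbbbbb) to 8 bits per channel. */
void rgb565_to_rgb888(uint16_t p, uint8_t *r, uint8_t *g, uint8_t *b)
{
    unsigned r5 = (p >> 11) & 0x1F;   /* 5 bits of red   */
    unsigned g6 = (p >> 5) & 0x3F;    /* 6 bits of green */
    unsigned b5 = p & 0x1F;           /* 5 bits of blue  */
    *r = (uint8_t)((r5 << 3) | (r5 >> 2));
    *g = (uint8_t)((g6 << 2) | (g6 >> 4));
    *b = (uint8_t)((b5 << 3) | (b5 >> 2));
}

/* Pack 8-bit channels into RGB565 by dropping the low bits. */
uint16_t rgb888_to_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}
```

Green keeps 64 levels while red and blue keep only 32, which is where the eye notices banding the most.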

Worth noting: if you want the more accurate formula to be fast, you will probably need to ditch the floating point operations and do the conversion with integers, a bit like this:

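```c
Grey = (77 * R + 151 * G + 28 * B) / 256;
```

The integer weights are the floating point ones multiplied by 256 and rounded, and they add up to exactly 256, so white stays white. In any proper language and with a decent compiler, the above should compile to code that uses a bit shift instead of a division (2^8 = 256, so shifting right by 8 bits is the same as dividing by 256). If you do that, though, make sure your variables are wide enough not to overflow: the weighted sum can reach 256 * 255 = 65280, which does not fit in 8 bits.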
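Here is a sketch of the integer version applied to a whole buffer, assuming tightly packed 8-bit RGB triplets (`desaturate_rgb24` is a hypothetical name). The intermediate sum is computed in 32-bit variables so it cannot overflow, and the division is written as a shift explicitly.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert n packed RGB pixels (3 bytes each) to n grey bytes. */
void desaturate_rgb24(const uint8_t *rgb, uint8_t *grey, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        uint32_t r = rgb[3 * i], g = rgb[3 * i + 1], b = rgb[3 * i + 2];
        /* 77 + 151 + 28 = 256, so the sum never exceeds 255 * 256. */
        grey[i] = (uint8_t)((77 * r + 151 * g + 28 * b) >> 8);
    }
}
```

The shift truncates; adding 128 to the sum before shifting would round to the nearest value instead, and still cannot exceed 255.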

Do keep in mind that any modern processor has vector processing (SIMD) capabilities, which let you desaturate multiple pixels in one operation. In that case it could be possible to do the conversion quite fast even with the floating point formula. Then again, if you are able to use such features, you probably didn’t need to read this.
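As an illustration of the vector approach, here is a sketch using the GCC/Clang vector extension (`__attribute__((vector_size(16)))`, a compiler extension rather than standard C) to process four pixels at a time with the floating point weights. It assumes the image has already been split into separate float arrays for red, green and blue (planar layout); that is my assumption, since real pixel data is usually interleaved and would need shuffling first. `desaturate_planar_simd` is a hypothetical name.

```c
#include <stddef.h>
#include <string.h>

/* Four floats handled as one value (GCC/Clang vector extension). */
typedef float v4sf __attribute__((vector_size(16)));

/* Weighted desaturation of planar float channels, four pixels per step. */
void desaturate_planar_simd(const float *r, const float *g, const float *b,
                            float *grey, size_t n)
{
    const v4sf kr = {0.3f, 0.3f, 0.3f, 0.3f};
    const v4sf kg = {0.59f, 0.59f, 0.59f, 0.59f};
    const v4sf kb = {0.11f, 0.11f, 0.11f, 0.11f};
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        v4sf vr, vg, vb, vy;
        /* memcpy avoids assuming the arrays are 16-byte aligned. */
        memcpy(&vr, r + i, sizeof vr);
        memcpy(&vg, g + i, sizeof vg);
        memcpy(&vb, b + i, sizeof vb);
        vy = kr * vr + kg * vg + kb * vb; /* element-wise, 4 pixels at once */
        memcpy(grey + i, &vy, sizeof vy);
    }
    for (; i < n; i++) /* leftover pixels, one at a time */
        grey[i] = 0.3f * r[i] + 0.59f * g[i] + 0.11f * b[i];
}
```

The compiler turns each vector expression into SIMD instructions where the target has them (SSE, NEON and so on) and falls back to scalar code otherwise.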